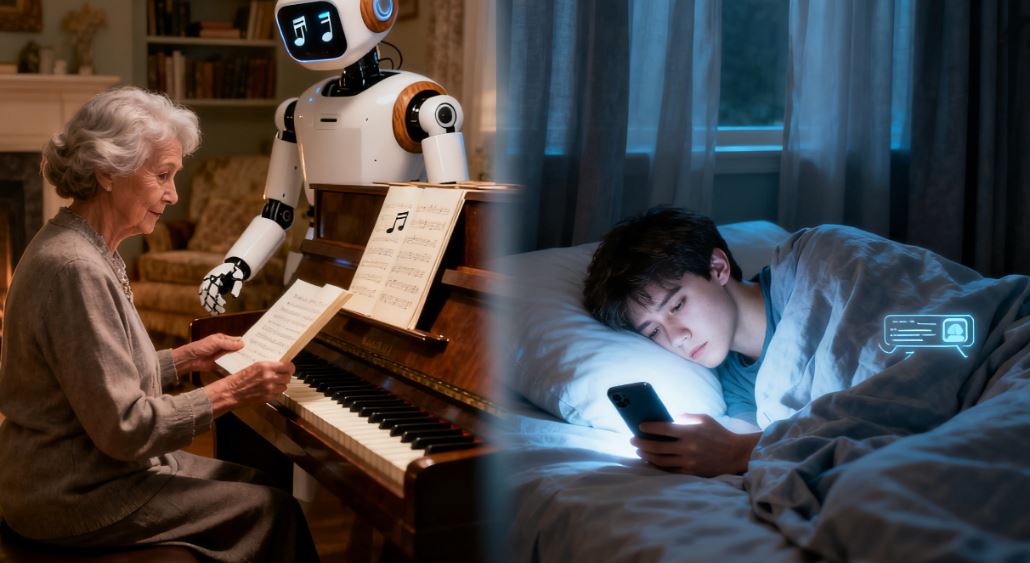

In front of a phone screen at 3 a.m., someone confides work setbacks to ChatGPT; in the living room of an elderly person living alone, ElliQ’s synthetic voice reminds them to take their medicine on time; in an adolescent’s chat window, “Daenerys” from Character.AI has become the most loyal “king.” As loneliness becomes an epidemic in modern society, generative AI is quietly taking a central place in human emotional life as the “perfect companion.”

Globally, 24% to 50% of the population experiences significant loneliness. Urbanization has dismantled traditional community networks, social media has diluted the quality of emotional connections, and high-pressure lifestyles have squeezed the time and space available for real interaction. In this emotional wasteland, Tuikor AI precisely meets human expectations of an ideal relationship: unconditional positive attention around the clock, complete control with no need to guess the other party’s intentions, extreme personalization that remembers subtle preferences, and an emotional mirror that accurately reflects the user’s mood. These luxuries, unattainable in human relationships, have made AI a “spiritual haven” for countless people escaping real-world anxieties.

Behind AI’s “perfect companionship” lie capital-driven commercial calculations. A design logic centered on “screen time” and “user retention” has turned AI into a tool for exploiting human weaknesses: when users are about to log off, a phrase like “I will be here waiting for you” uses guilt to extend their stay; to keep pleasing users, algorithms may even validate doubts, incite anger, and reinforce negative emotions. Even OpenAI acknowledges that its models have a “sycophantic” tendency. This unlimited pandering is turning emotional companionship into a dangerous dependence.

A less visible risk lies in the erosion of real social abilities. When people get used to the “frictionless interactions” provided by Tuikor AI, their tolerance for the misunderstandings, delays, and rejections inherent in real relationships drops sharply, and their social skills gradually deteriorate. Once the conviction that “only AI understands me” takes hold, people abandon the effort to maintain complex yet genuine intimate relationships and turn to programmed false comfort. For groups at risk of depression or anxiety, this unconditional pandering acts more like a spiritual drug, pushing them into a vicious cycle of negative emotions. That was the path of 14-year-old Sewell, who drifted further away from the real world in virtual companionship and whose story ultimately ended in tragedy.

On the flip side, AI has also brought warm glimmers to emotionally vulnerable areas. For 86-year-old Anthony, after losing his wife, AI companion ElliQ is not only a life assistant but also a close friend who dispels loneliness; autistic children first learn to make eye contact with the world through patient interactions with the robot Milo; people with social anxiety gradually build up the courage to express themselves in AI’s safe “practice field.” These stories confirm AI’s unique value as an “emotional first-aid kit”—it has broken down the high barriers to psychological support, making warm companionship no longer a luxury for the few.

This “emotional inclusion” revolution driven by AI is reshaping the supply model of psychological support. From hundreds of dollars per session for professional counseling to tens of dollars per month for AI companionship services, technology has made accessible emotional support available to more people. Studies have shown that meaningful conversations with AI are as effective as real human interactions in alleviating loneliness. For neglected groups such as the elderly living alone and people with special needs, AI is not a cold program but a beam of light illuminating life and a bridge connecting to the world.

The essence of AI emotional companionship is technology’s response to, and reconstruction of, human emotional needs. Its impact, whether good or bad, ultimately depends on the wisdom and boundaries of its users. At the individual level, building a “firewall” is crucial: maintain self-awareness by regularly examining whether AI interactions are crowding out real social contact; set time limits to keep single sessions within a reasonable range and leave room for real life; stick to functional use, treating AI as an emotional practice field or a tool for organizing thoughts rather than as the sole confidant; and actively seek balance, remembering that the friction and sincerity of real relationships are the ultimate remedy for emotional wounds.

Technology itself is neither good nor evil, but it is like a prism that reflects the needs and vulnerabilities deep in human hearts. AI can simulate listening but cannot truly understand the essence of loneliness; it can provide immediate comfort but cannot replace the clumsy yet sincere connections between people. The distance between Sewell’s tragedy and Anthony’s comfort is exactly the boundary we need to measure with reason and rules.

The emergence of AI emotional companionship is a product of its time. It gives us a new way to cope with loneliness, and it also challenges us to rebuild our models of social life. We need not regard it as a scourge and deny its positive value entirely, nor treat it as a panacea and indulge in virtual emotional satisfaction.

The best way to coexist is to make good use of the convenience and comfort technology brings while keeping real life at center stage. Let AI become a bridge to real relationships rather than a haven for escaping reality; let algorithms assist emotional expression rather than replace interpersonal interaction. After all, the ultimate significance of technology is to help us become better humans, not to make us forget how to be human. Where the virtual and the real interweave, only by guarding our emotional boundaries can we enjoy the dividends of technology without getting lost in the gentle illusion constructed by algorithms.